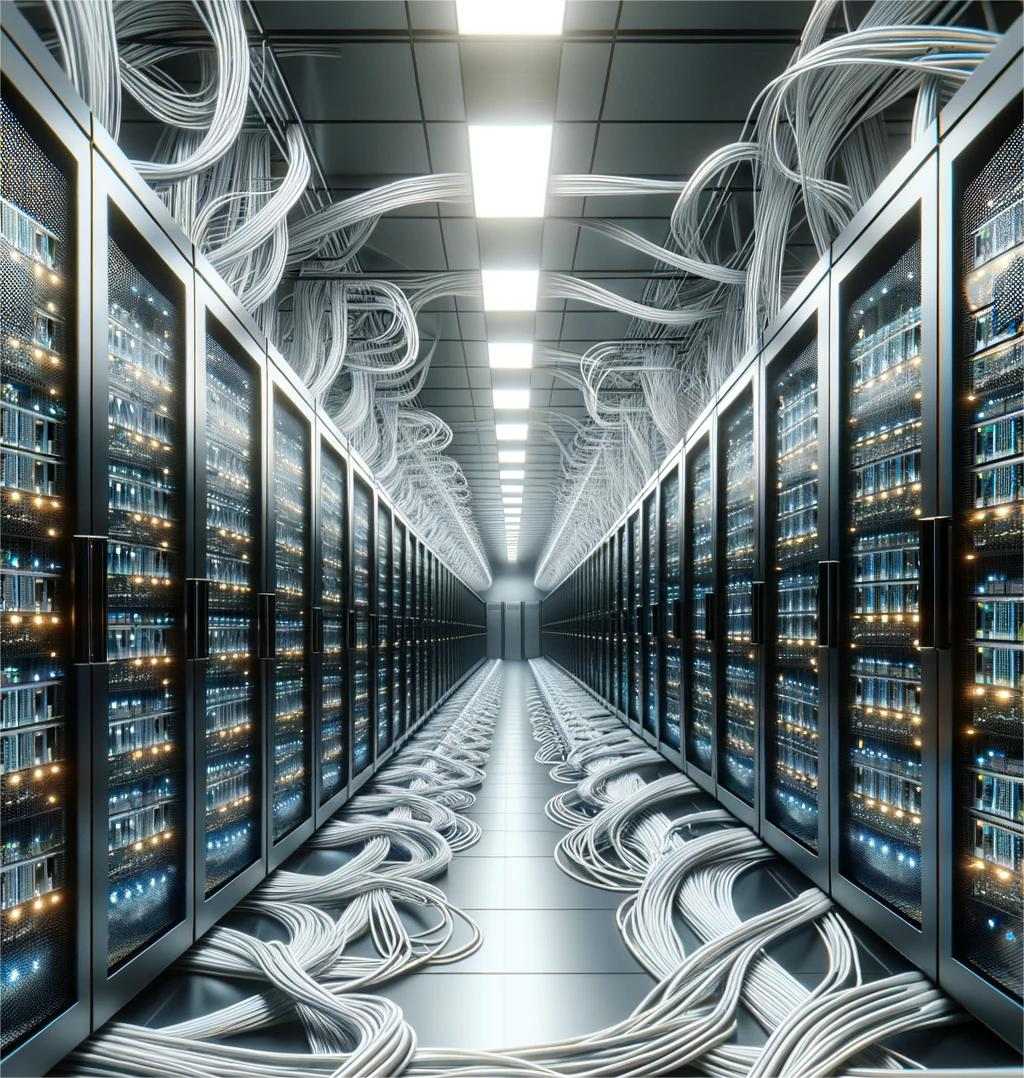

A modern cloud, purpose-built for cutting-edge AI

MegaSpeed.Ai provides access to the industry’s broadest range of NVIDIA GPUs, so you can match compute to the complexity of your workloads. Our Kubernetes-native infrastructure delivers lightning-quick spin-up times, responsive auto-scaling, and a modern networking architecture, so performance scales with you.

Right-size your workloads

Bare-metal performance via Kubernetes

Full-stack machine learning expertise

Train, fine-tune, and serve models for any AI application on scalable, on-demand infrastructure, with highly available GPU resources at massive scale at your fingertips. Need support? Our clients often view our DevOps and infrastructure engineers as an extension of their own team.

Fastest spin-up times and most responsive auto-scaling

MegaSpeed.Ai delivers the industry’s leading inference solution to help you serve models as efficiently as possible, with proprietary auto-scaling technology and spin-up times as short as 5 seconds. Data centers across the country minimize latency and deliver superior performance for end users.

State-of-the-art distributed training clusters

We build our A100 distributed training clusters with a rail-optimized design, using NVIDIA Quantum InfiniBand networking and in-network collectives with NVIDIA SHARP, to deliver the highest possible distributed training performance.

Realize the benefits of bare metal without having to manage the infrastructure

We built MegaSpeed.Ai Cloud with engineers in mind. You access GPUs by deploying containerized workloads via Kubernetes, which means greater portability, less complexity, and lower overall costs. Not a Kubernetes expert? We’re here to help.
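
For a sense of what deploying a containerized GPU workload via Kubernetes can look like, here is a minimal sketch in Python that builds a Pod manifest requesting one GPU. It assumes the cluster exposes GPUs under the `nvidia.com/gpu` resource name used by the NVIDIA device plugin; whether MegaSpeed.Ai Cloud uses that name is an assumption, not something stated here. The pod name, the image reference, and the `gpu_pod_manifest` helper are hypothetical placeholders.

```python
import json


def gpu_pod_manifest(name, image, gpus=1):
    """Build a minimal Kubernetes Pod manifest that requests `gpus` GPUs.

    Assumption: GPUs are advertised as the extended resource
    "nvidia.com/gpu" (the NVIDIA device plugin convention).
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # Extended resources such as GPUs are requested under limits.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


# Hypothetical pod name and image, for illustration only.
manifest = gpu_pod_manifest("gpu-smoke-test", "example.registry/gpu-workload:latest")
print(json.dumps(manifest, indent=2))
```

Because `kubectl` accepts JSON as well as YAML, the printed manifest could be saved to a file and submitted with `kubectl apply -f`, assuming you already have credentials for a cluster.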