NVIDIA HGX H100

The NVIDIA HGX H100 delivers 7x better efficiency in high-performance computing (HPC) applications, up to 9x faster AI training on the largest models, and up to 30x faster AI inference than the NVIDIA HGX A100. Yep, you read that right.

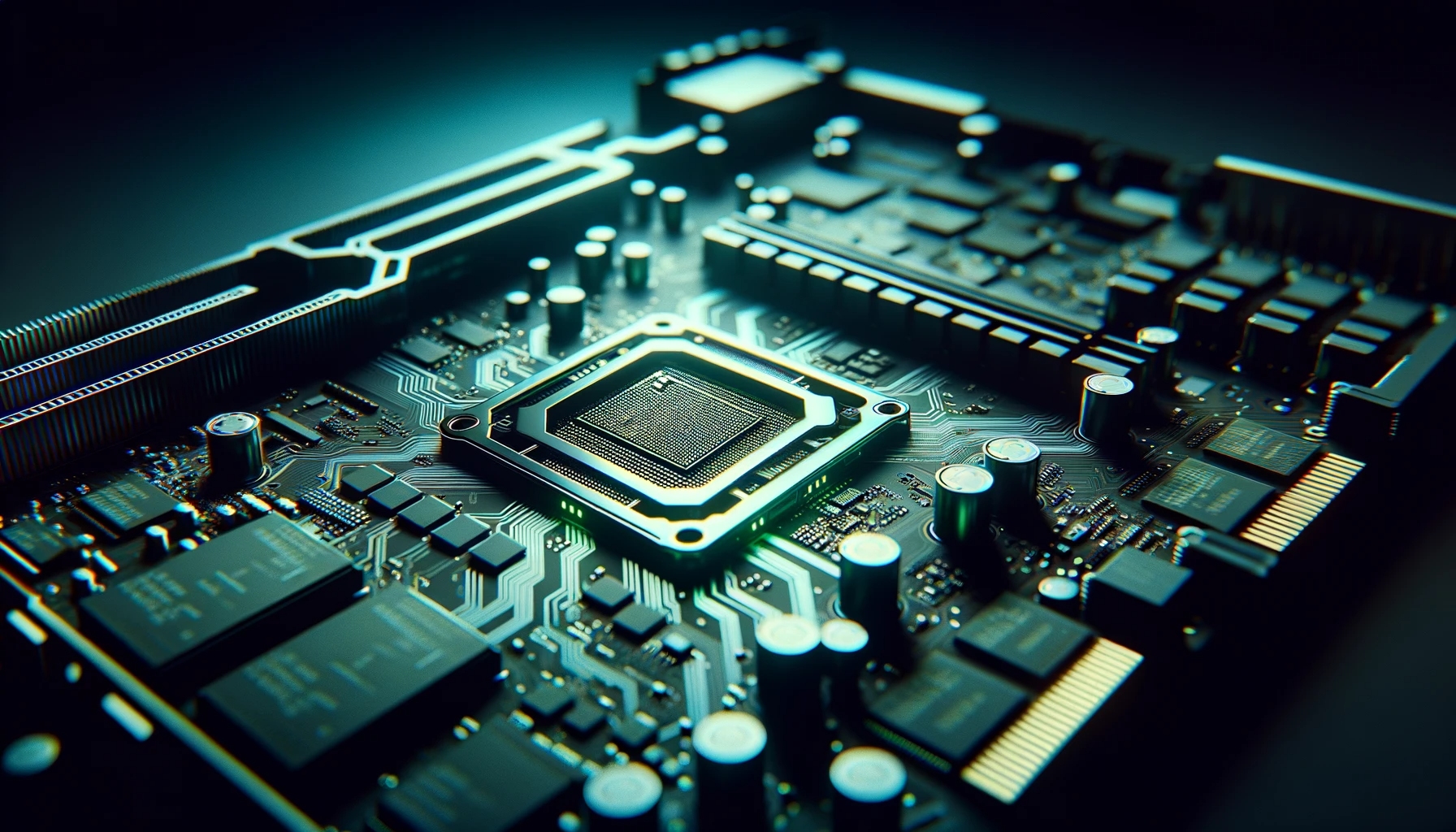

Fast, flexible infrastructure for optimal performance

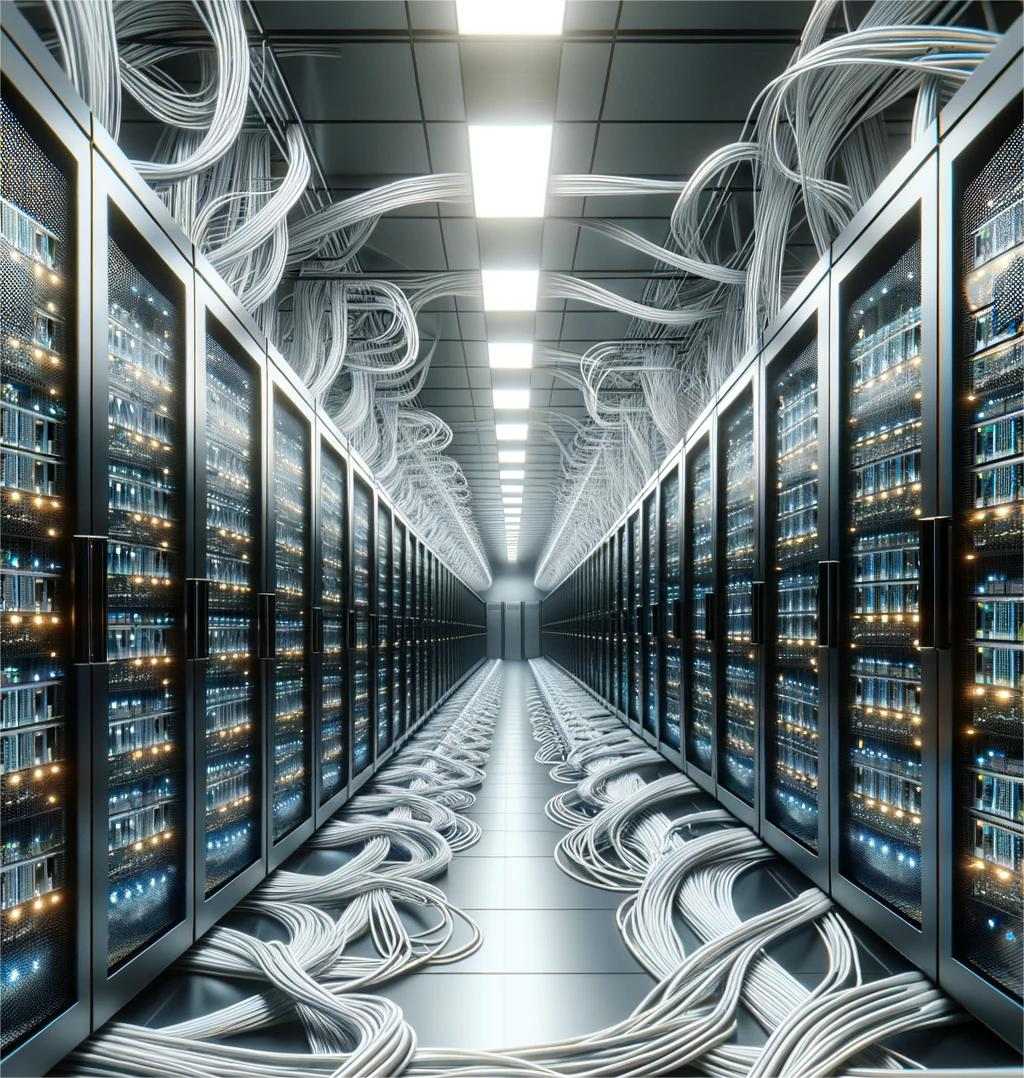

Superior networking architecture, with NVIDIA InfiniBand

Easily migrate your existing workloads

Tap into our state-of-the-art distributed training clusters, at scale

MegaSpeed.Ai's HGX H100 infrastructure can scale up to 16,384 H100 SXM5 GPUs under a single non-blocking InfiniBand fat-tree fabric, giving you access at massive scale to the world's most performant and deeply supported model training accelerators.

Our infrastructure is purpose-built to solve the toughest AI/ML and HPC challenges. You gain performance and cost savings via our bare-metal Kubernetes approach, our high-capacity data center network designs, our high-performance storage offerings, and so much more.

Avoid rocky training performance with MegaSpeed.Ai’s non-blocking GPUDirect fabrics built exclusively using NVIDIA InfiniBand technology.

MegaSpeed.Ai’s NVIDIA HGX H100 supercomputer clusters are built using NVIDIA InfiniBand NDR networking in a rail-optimized design, with support for NVIDIA SHARP in-network collectives.
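To make "rail-optimized" concrete, here is a minimal sketch of the usual idea: the GPU in slot i of every node attaches to the same rail, so GPUs that share a local index can reach each other with the fewest switch hops. This is an illustration under stated assumptions, not a description of MegaSpeed.Ai's actual topology. It assumes 8 GPUs per HGX H100 node and ranks numbered node by node; the function names are hypothetical.

```python
# Simplified model of a rail-optimized layout (illustrative only).
# Assumptions: 8 GPUs per HGX H100 node, global ranks assigned node by node.
GPUS_PER_NODE = 8


def node_of(global_rank: int, gpus_per_node: int = GPUS_PER_NODE) -> int:
    """Index of the node that hosts this global rank."""
    return global_rank // gpus_per_node


def rail_of(global_rank: int, gpus_per_node: int = GPUS_PER_NODE) -> int:
    """Rail index: the GPU's local slot within its node."""
    return global_rank % gpus_per_node


def same_rail(rank_a: int, rank_b: int) -> bool:
    """True when both ranks sit on the same rail in this model."""
    return rail_of(rank_a) == rail_of(rank_b)


if __name__ == "__main__":
    total_gpus = 16_384  # maximum scale quoted above
    print("nodes:", total_gpus // GPUS_PER_NODE)
    print("rank 3 and rank 11 share a rail:", same_rail(3, 11))
```

In this model, 16,384 GPUs correspond to 2,048 eight-GPU nodes, and ranks 3 and 11 (slot 3 on nodes 0 and 1) land on the same rail.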

Training AI models is incredibly expensive, so our designs are painstakingly reviewed to make sure your training experiments use the best technologies and maximize your compute per dollar.

Scratching your head over on-prem deployments? Don’t know how to optimize your training setup? Utterly confused by the options at other cloud providers?

MegaSpeed.Ai delivers everything you need out of the box to run optimized distributed training at scale, with industry-leading tools like Determined.AI and SLURM.

Need help figuring something out? Leverage MegaSpeed.Ai’s team of ML engineers at no extra cost.

Highly configurable compute with responsive auto-scaling

No two models are the same, and neither are their compute requirements. With customizable configurations, MegaSpeed.Ai provides the ability to “right-size” inference workloads with economics that encourage scale.

Flexible storage solutions with zero ingress or egress fees

Storage on MegaSpeed.Ai is managed separately from compute, with all-NVMe, HDD, and object storage options to meet your workload demands.

Get up to 10,000,000 IOPS per volume on our All NVMe Shared File System tier, or leverage our NVMe-accelerated Object Storage offering to feed all your compute instances from the same storage location.
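For a rough sense of what an IOPS figure means for data loading, throughput is simply IOPS multiplied by the I/O size. The sketch below does that arithmetic. The 4 KiB block size is an assumption chosen for illustration, since the I/O size behind the quoted figure is not stated here, and the function name is hypothetical.

```python
# Back-of-the-envelope conversion from IOPS to throughput (illustrative only).
# Assumption: every I/O operation transfers exactly block_size_bytes.


def throughput_gb_per_s(iops: int, block_size_bytes: int) -> float:
    """Throughput in decimal gigabytes per second (1 GB = 1e9 bytes)."""
    return iops * block_size_bytes / 1e9


if __name__ == "__main__":
    iops = 10_000_000        # peak per-volume figure quoted above
    block_size = 4 * 1024    # 4 KiB per operation (assumed)
    print(f"{throughput_gb_per_s(iops, block_size):.2f} GB/s")
```

At the assumed 4 KiB per operation, 10,000,000 IOPS works out to about 40.96 GB/s. Larger I/O sizes usually hit a bandwidth limit well before the IOPS limit, so treat this as an upper-bound estimate rather than a sustained rate.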