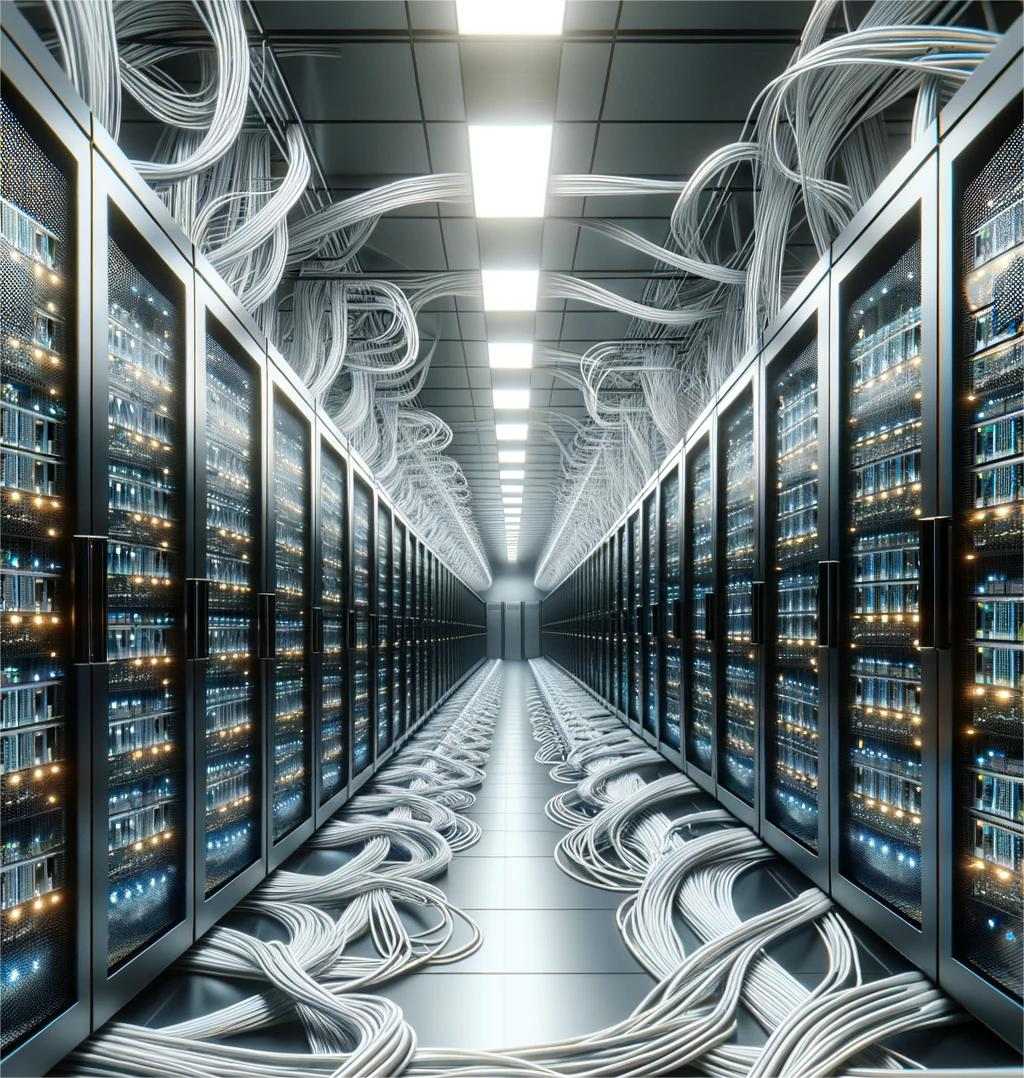

A modern infrastructure, built for compute-intensive workloads

With all resources managed by a single orchestration layer, our clients gain greater portability, lower overhead, and less management complexity than with traditional VM-based deployments.

Blazing fast spin-up times. Responsive auto-scaling.

Instant gratification

Independent control

Standards-based inference platform with industry-leading scalability

Deploy inference with a single YAML file. We support all popular ML frameworks (TensorFlow, PyTorch, scikit-learn, TensorRT, and ONNX) as well as custom serving implementations. Optimized for NLP with streaming responses and context-aware load balancing.
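As a rough illustration of what a single-file deployment can look like, the sketch below builds a manifest in Python and prints it as JSON, which is also valid YAML. It assumes a KServe-style `InferenceService` resource. The page does not name the serving standard, so the resource kind, the service name, the storage URI, and the replica counts are all hypothetical placeholders rather than the platform's documented schema.

```python
import json


def inference_service_manifest(name, framework, storage_uri,
                               min_replicas=0, max_replicas=4, gpus=1):
    """Build a KServe-style InferenceService manifest (assumed schema)."""
    return {
        "apiVersion": "serving.kserve.io/v1beta1",
        "kind": "InferenceService",
        "metadata": {"name": name},
        "spec": {
            "predictor": {
                # Auto-scaling bounds; zero replicas lets the service scale down when idle.
                "minReplicas": min_replicas,
                "maxReplicas": max_replicas,
                # The framework key (e.g. "pytorch", "tensorflow") selects the model server.
                framework: {
                    "storageUri": storage_uri,
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                },
            }
        },
    }


# Hypothetical model name and bucket, used only for illustration.
manifest = inference_service_manifest(
    name="sentiment-classifier",
    framework="pytorch",
    storage_uri="s3://example-bucket/models/sentiment",
)

# JSON is a subset of YAML, so this output can be saved as a .yaml file.
print(json.dumps(manifest, indent=2))
```

Saving the printed output to a file and applying it with `kubectl apply -f` would be the usual next step on a cluster that has the corresponding resource type installed.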

Industry-standard architecture, designed to deliver the best possible performance

We build our distributed training clusters with a rail-optimized design, using NVIDIA Quantum InfiniBand networking and in-network collectives with NVIDIA SHARP to deliver the highest distributed training performance possible.

Accelerate artist workflows by eliminating the render queue

Leverage container auto-scaling in render managers such as Deadline to go from a standstill to rendering a full VFX pipeline in seconds.

Run thousands of GPUs for parallel computation

Leverage powerful Kubernetes-native workflow orchestration tools like Argo Workflows to run and manage the lifecycle of parallel processing pipelines for VFX rendering, health sciences simulations, financial analytics, and more.
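To make the fan-out pattern concrete, here is a minimal sketch of an Argo Workflows manifest that runs one GPU task per frame in parallel, again built in Python and printed as JSON (which is valid YAML). The container image, the `--frame` argument, and the frame count are hypothetical; a real pipeline would substitute its own renderer or simulation image.

```python
import json


def parallel_render_workflow(name, image, frame_count, gpus_per_task=1):
    """Build an Argo Workflow that fans out one task per frame."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Workflow",
        "metadata": {"generateName": f"{name}-"},
        "spec": {
            "entrypoint": "render-all",
            "templates": [
                {
                    # Fan-out step: withSequence expands into frame_count parallel tasks.
                    "name": "render-all",
                    "steps": [[
                        {
                            "name": "render-frame",
                            "template": "render-frame",
                            "arguments": {
                                "parameters": [{"name": "frame", "value": "{{item}}"}]
                            },
                            "withSequence": {"count": str(frame_count)},
                        }
                    ]],
                },
                {
                    # Worker template: one container per frame, each requesting GPUs.
                    "name": "render-frame",
                    "inputs": {"parameters": [{"name": "frame"}]},
                    "container": {
                        "image": image,  # hypothetical image name
                        "args": ["--frame", "{{inputs.parameters.frame}}"],
                        "resources": {"limits": {"nvidia.com/gpu": gpus_per_task}},
                    },
                },
            ],
        },
    }


workflow = parallel_render_workflow(
    name="vfx-render",
    image="registry.example.com/renderer:latest",
    frame_count=240,
)
print(json.dumps(workflow, indent=2))
```

Because every frame is an independent task, the scheduler can place them on as many GPUs as are available, and the workflow controller tracks retries and completion for each one.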