Faster spin-up times. More responsive autoscaling.

From optimized GPU usage and autoscaling to sensible resource pricing, we designed our solutions to be cost-effective for your workloads. Plus, you have the flexibility to configure your instances based on your deployment requirements.

Bare-metal speed and performance

Scale without breaking the bank

No fees for ingress, egress, or API calls

MegaSpeed.Ai Inference Service offers a modern way to run inference that delivers better performance and minimal latency while being more cost-effective than other platforms.

Optimize GPU resources for greater efficiency and lower costs.

Autoscale containers based on demand to fulfill user requests significantly faster than is possible when scaling the hypervisor-backed instances of other cloud providers. When a new request comes in, it can be served in as little as:

· 5 seconds for small models

· 10 seconds for GPT-J

· 15 seconds for GPT-NeoX

· 30-60 seconds for larger models
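
To make demand-based autoscaling concrete, here is a minimal Python sketch of a concurrency-based replica calculation. It is an illustration only, not MegaSpeed.Ai's actual autoscaler: the function name `desired_replicas`, the per-replica concurrency target, and the replica bounds are all assumptions introduced for this example.

```python
import math


def desired_replicas(in_flight: int, target_per_replica: int,
                     min_replicas: int = 0, max_replicas: int = 10) -> int:
    """Return how many container replicas are needed for the current load.

    All names and defaults here are hypothetical; a real autoscaler also
    smooths the signal over a time window, which this sketch omits.
    """
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    # Enough replicas so that none handles more than the target concurrency.
    wanted = math.ceil(in_flight / target_per_replica)
    # Clamp to the configured bounds (min_replicas=0 allows scale-to-zero).
    return max(min_replicas, min(max_replicas, wanted))


print(desired_replicas(0, 4))    # no traffic: scales to zero
print(desired_replicas(9, 4))    # 9 in-flight requests, 4 per replica
print(desired_replicas(100, 4))  # demand above the cap is clamped
```

With a minimum of zero replicas, the first request after an idle period waits for a container to start, which is why the spin-up times listed above matter.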

Deploy models without having to worry about correctly configuring the underlying framework.

KServe enables serverless inferencing on Kubernetes with an easy-to-use interface for common ML frameworks like TensorFlow, XGBoost, scikit-learn, PyTorch, and ONNX to solve production model-serving use cases.

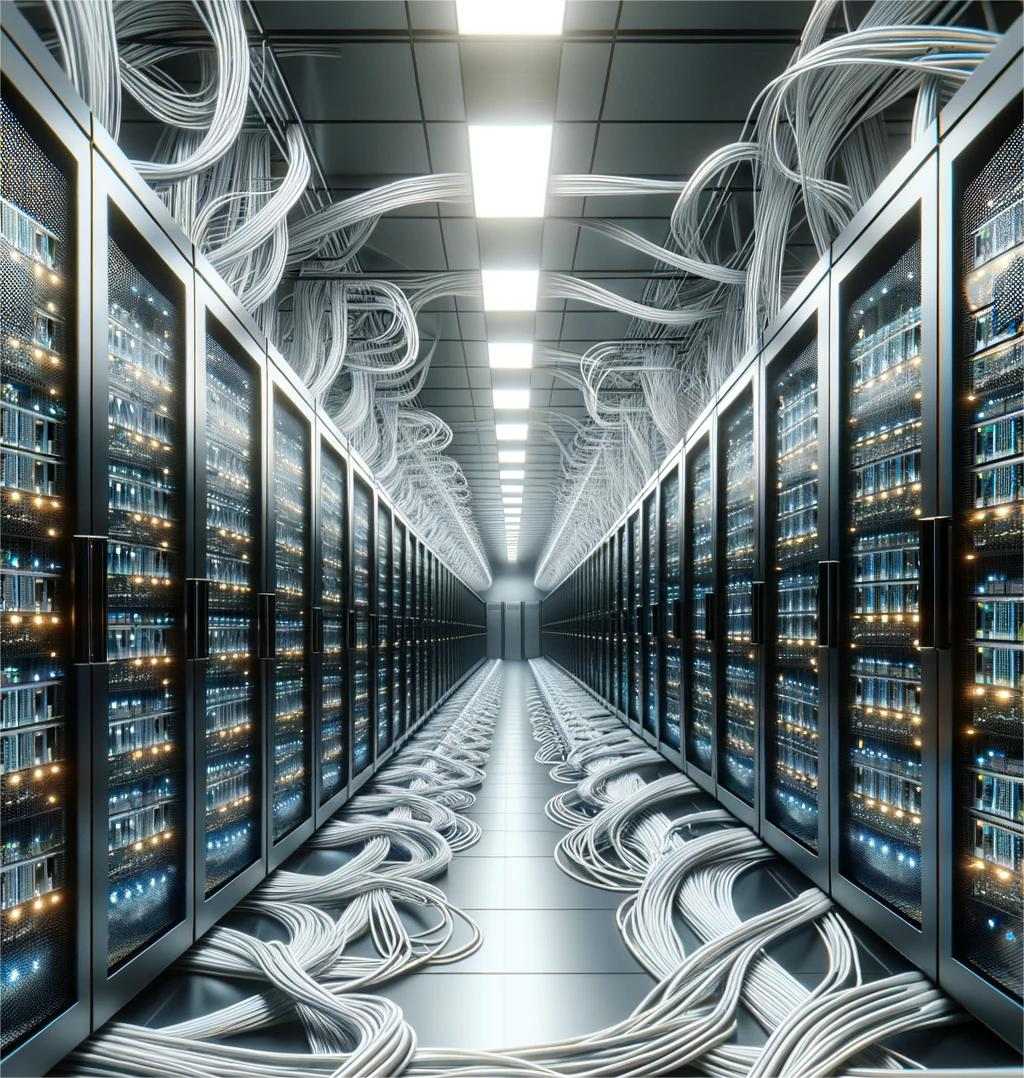
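
As a rough illustration of what a KServe deployment looks like, the Python sketch below builds a KServe `v1beta1` `InferenceService` manifest as a plain dictionary. The helper name `build_inference_service`, the service name, and the bucket path are hypothetical placeholders, and the replica settings are assumptions rather than MegaSpeed.Ai defaults.

```python
import json


def build_inference_service(name: str, model_format: str, storage_uri: str,
                            min_replicas: int = 0, max_replicas: int = 3) -> dict:
    """Build a KServe v1beta1 InferenceService manifest as a dict.

    The helper itself is hypothetical; the field names follow the
    KServe v1beta1 InferenceService schema.
    """
    return {
        "apiVersion": "serving.kserve.io/v1beta1",
        "kind": "InferenceService",
        "metadata": {"name": name},
        "spec": {
            "predictor": {
                # Bounds for the autoscaler; 0 permits scale-to-zero.
                "minReplicas": min_replicas,
                "maxReplicas": max_replicas,
                "model": {
                    # Tells KServe which serving runtime to select.
                    "modelFormat": {"name": model_format},
                    # Where the model artifacts are fetched from.
                    "storageUri": storage_uri,
                },
            }
        },
    }


# Placeholder values for illustration only.
manifest = build_inference_service(
    "sklearn-demo", "sklearn", "s3://example-bucket/models/iris"
)
print(json.dumps(manifest, indent=2))
```

The printed JSON can be saved to a file and applied with standard Kubernetes tooling. Only the model format and storage location are specified, so the underlying framework needs no configuration.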

Get ultramodern, high-performance networking out of the box.

MegaSpeed.Ai's Kubernetes-native network design moves functionality into the network fabric, so you get the function, speed, and security you need without having to manage IPs and VLANs.

· Deploy Load Balancer services with ease

· Access the public internet via multiple global Tier 1 providers at up to 100 Gbps per node

· Get custom configuration with MegaSpeed.Ai Virtual Private Cloud (VPC)

Easily access and scale storage capacity with solutions designed for your workloads.

MegaSpeed.Ai Cloud Storage Volumes are built on top of Ceph, open-source software designed to scale for enterprises. Our storage solutions make it easy to serve machine learning models from a range of storage backends, including S3-compatible object storage, HTTP, and a MegaSpeed.Ai Storage Volume.
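
The storage backends listed above are typically selected through the scheme of a model's storage URI. The Python sketch below maps a URI to its backend. The function name `storage_backend` and the example URIs are hypothetical, and treating a MegaSpeed.Ai Storage Volume as a `pvc://` URI (the KServe convention for a PersistentVolumeClaim) is an assumption.

```python
from urllib.parse import urlparse

# Scheme-to-backend mapping. The "pvc" entry assumes that a storage
# volume is exposed to the cluster as a PersistentVolumeClaim.
BACKENDS = {
    "s3": "S3-compatible object storage",
    "http": "HTTP",
    "https": "HTTP",
    "pvc": "storage volume (PersistentVolumeClaim)",
}


def storage_backend(uri: str) -> str:
    """Return a description of the backend a storage URI points at."""
    scheme = urlparse(uri).scheme
    if scheme not in BACKENDS:
        raise ValueError(f"unsupported storage URI scheme: {scheme!r}")
    return BACKENDS[scheme]


# Hypothetical URIs, one per backend named in the text.
for uri in ("s3://example-bucket/models/gpt-j",
            "https://example.com/models/model.onnx",
            "pvc://model-volume/gpt-neox"):
    print(uri, "->", storage_backend(uri))
```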